The Kira Talent Blog

Breaking down bias in admissions

The how-to guide to reducing admissions bias at your school

Case Studies · 3 minute read

Crystal gains more authentic insight into prospective families with an engaging enrollment interview

Product · 3 minute read

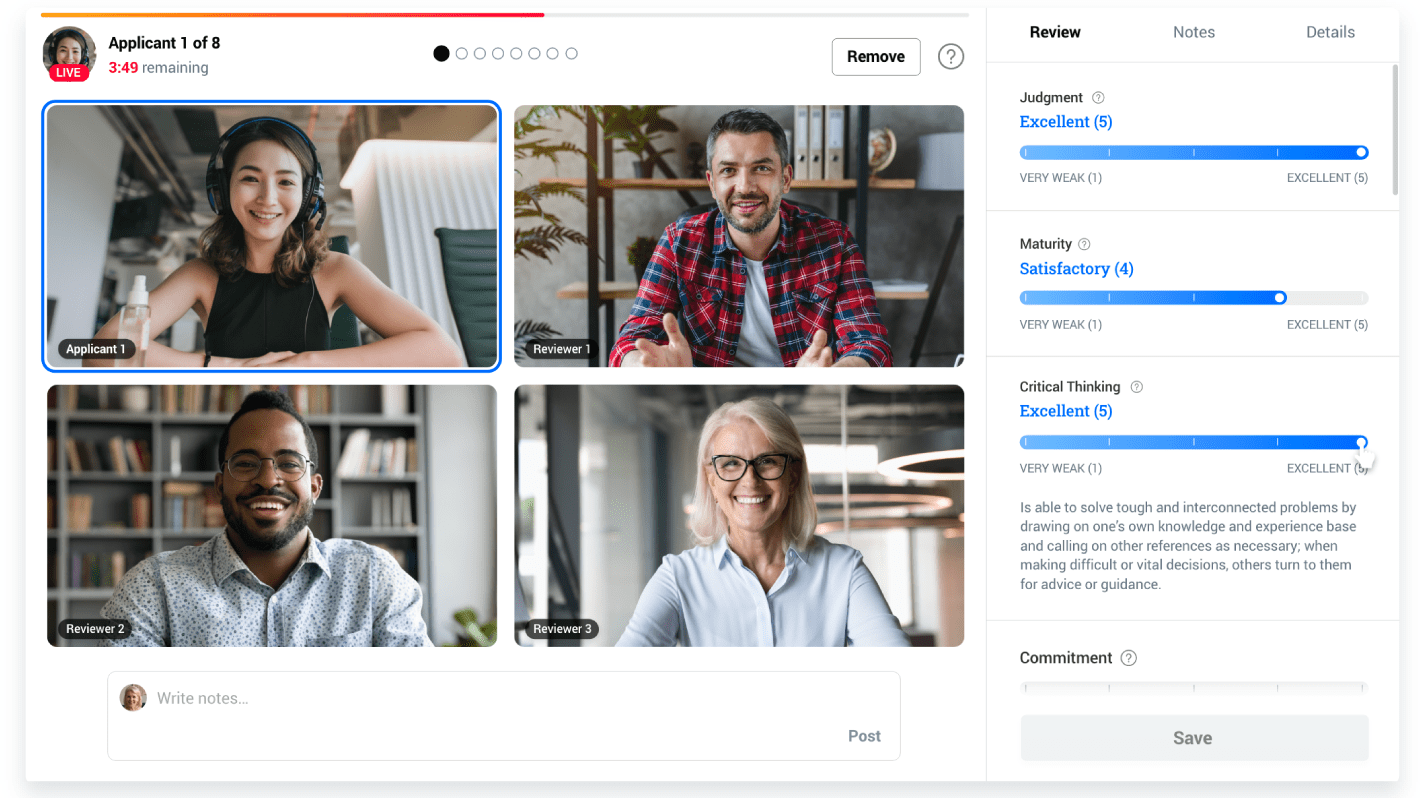

Quarterly Product Digest: April 2024

Case Studies · 6 minute read

UTMB John Sealy School of Medicine moves their MMI from Zoom to Kira, getting early offers out to applicants faster

Case Studies · 5 minute read

The University of Surrey saves 350+ hours with more efficient credibility interviews

Reports and Data · 7 minute read

Kira Talent Client Experience Report 2023/24

Case Studies · 5 minute read

Yale SOM celebrates ten years of efficient and effective holistic review with Kira Talent

Reports and Data · 9 minute read

Kira Applicant Experience Report 2023

Product · 6 minute read

The 2023 Product Digest

Case Studies · 4 minute read