During my summer internship at Kira Talent, I had the opportunity to hear Dr. Ann Cavoukian speak on “Privacy by Design in an Era of Artificial Intelligence” at The Michener Institute of Education at University Health Network.

Dr. Cavoukian is Ontario’s longest-serving Information and Privacy Commissioner. She was appointed in 1997 and served three terms spanning 17 years. Her background in psychology brought a fresh mindset to the Commissioner role, with a focus on preventing privacy problems.

Cavoukian is also well known for developing Privacy by Design, “a framework that seeks to proactively embed privacy into the design specifications of information technologies, networked infrastructure, and business practices, thereby achieving the strongest protection possible.”

Having grown up as a millennial, and now as an undergraduate student at a Canadian university, I was curious to learn more about the relationship between privacy and technology. I’ve often wondered how my data is stored and protected, how it is shared with other parties internally and externally, how it is handled once I’ve graduated, and how it could be used to everyone’s advantage.

Why Is Privacy Important?

“People want privacy more than ever,” Cavoukian insisted during her talk.

Public opinion polls consistently find that at least 90% of people are very concerned about their privacy and their loss of control over their personal information, but don’t know how to regain that control.

One of my professors once commented that my university’s course management system has become more invasive with each update. It tracks students’ online activity: when we sign on or off, all of our communication, which web pages, links, and documents we view and download, how much time we spend on each page, and more.

I wasn’t too surprised to hear this, as it seems almost expected given how much we hear about surveillance and tracking. It did, however, make me more interested in my data security and the proactive measures I should be taking.

“Privacy is not about having something to hide … it’s about control and forms the foundation of our freedom,” Cavoukian noted.

Privacy equals personal control, and with control comes freedom of choice. Data subjects, much like the students required to use their university’s course management system, should be able to decide what information they would like shared, and to what extent. They deserve transparency and a right of access; it is, after all, their information.

“Only the individual knows what is relevant and sensitive to them,” affirmed Cavoukian, and personal preferences vary between individuals.

Cavoukian said that Privacy by Design benefits companies and organizations as well, because users “are the ones who will keep the information accurate and are the only ones who know that what you have on file is actually correct.” The result is better-quality data.

Positive-Sum Models: The Power of “And”

A common concern in discussions of privacy is that it competes with other interests, and that privacy is usually the one sacrificed to advance them.

“This either-or, win-lose model is so destructive. It’s never privacy that wins. So the model we’ve advanced [with Privacy by Design] is called positive sum. Positive sum is where you can have two positive gains in two areas at the same time. So it becomes a win-win proposition,” explained Cavoukian.

Under a positive-sum model, universities could still track important student data to build systems and supports that better serve students, while making students fully aware of how their data is collected and used.

Privacy by Design Framework

“The Seven Foundational Principles of Privacy by Design create the path forward to a world in which privacy and technology can not only co-exist but can be enhanced.” To Cavoukian, “Privacy by Design is as simple and as difficult as the seven principles.” It is an enabling model, and she sees it as an opportunity for creativity, innovation, and expanding models.

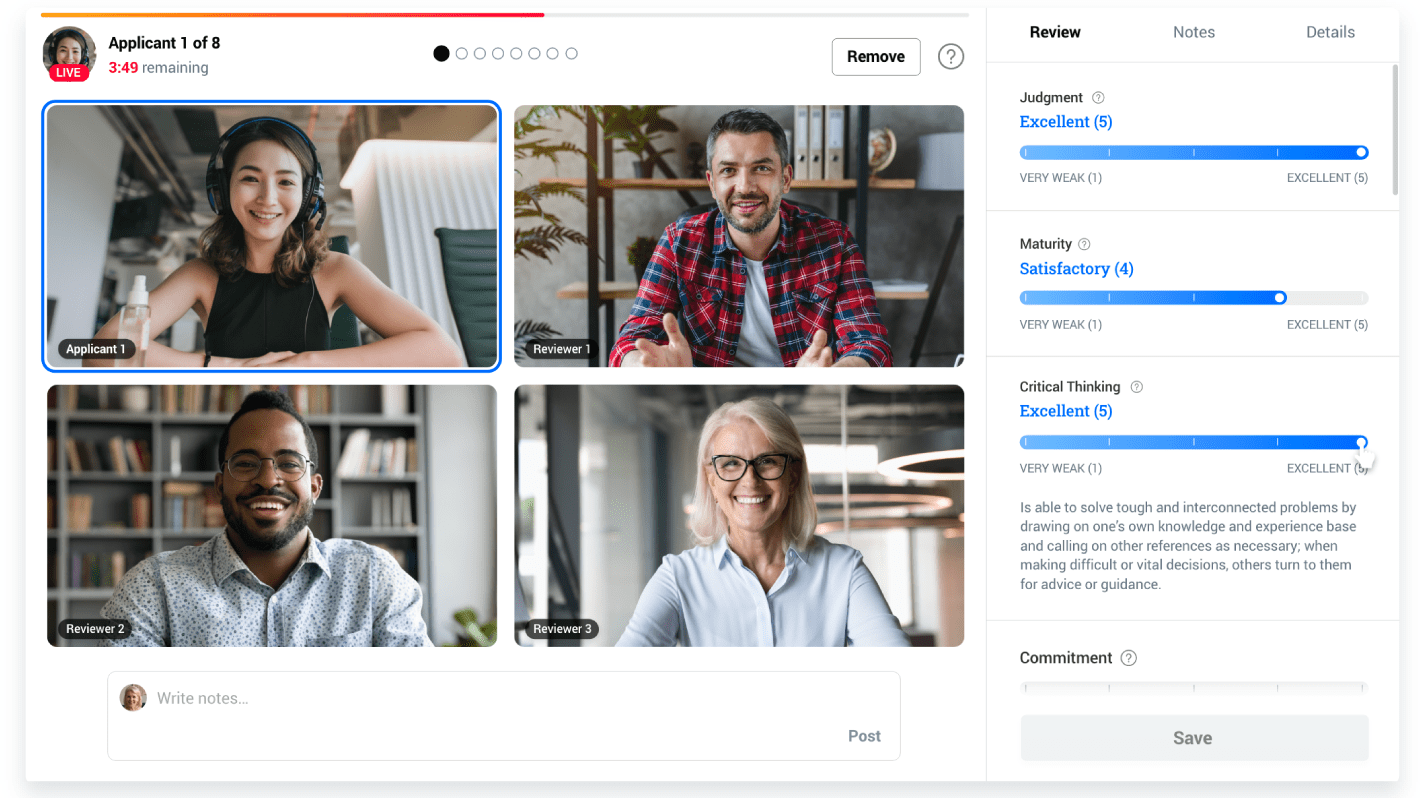

At Kira Talent, we have adopted the Privacy by Design foundational principles across our product and organizational policies, including a regular review and certification process for our privacy policies.

Privacy protection works best when it is built in proactively from the start, hence the name Privacy by Design.

“It is much easier and far more cost-effective to build in privacy and security, up-front, rather than after-the-fact, reflecting the most ethical treatment of personal data,” said Cavoukian.

Privacy and Data Utility

So where do we see Privacy by Design truly doing good?

Cavoukian shared an example of Intel’s work with aging adults regarding remote home health care: “With the demographics of our aging population, there are a lot of single elderly people who want to live in their homes but they might need some help from time to time.”

Intel would attach sensors to an individual’s bed so that if they got up in the middle of the night to use the restroom and didn’t return within a preset period of time, an alert would go out wirelessly and help would come.

Because the individual gives positive consent, this is a great example of using technology for good.

“It enables people to stay in their homes longer and yet get the help they need when they need it,” explained Cavoukian. “That’s the And. That’s the Win-Win. Privacy and data utility, you have to have both and you can have both, and make it work for you.”

As a student today, I see an interesting future ahead of us, one where we can take Cavoukian’s framework and use it to improve student services. By tracking information about incoming students, their engagement on campus, and how well they do in their courses, schools could create personalized study plans and counselling services backed by each student’s own data, movements, and passions while on campus. All, of course, with the students’ full positive consent.

Why Now?

Schools need to be thinking about Privacy by Design now, not only because students and parents are beginning to question how their data is used, but also because of regulations like the European Union’s General Data Protection Regulation.

“In 2010, [privacy commissioners] unanimously passed a resolution that regulatory compliance was no longer sufficient as the sole model of ensuring the future of privacy,” shared Cavoukian. She went on to add that “the time is ripe for consideration of privacy and ethical issues. We are at a very solid time in terms of interest in privacy and data protection. There is a call for algorithmic transparency; there is danger in taking algorithms without skepticism, in the context of unconscious bias.”

On May 25, 2018, the European Union began enforcing a new law, the General Data Protection Regulation (GDPR), which builds privacy in automatically. People in the EU no longer have to go searching for privacy protections for their personal data. “Privacy by Design is included in the law … Privacy as the default,” noted Cavoukian.

With the introduction of the GDPR, we are moving “from a model of negative consent to a model of positive consent, to a model where you’re going to automatically have privacy,” explained Cavoukian. In fact, Ben Rossi at Information Age, a leading UK business technology magazine, wrote: “It’s not too much of a stretch to say that if you implement Privacy by Design, you’ve mastered the GDPR.”

I believe my generation, and every generation after us, needs privacy more than ever, because we’ve grown up with our data on the web. We have the most information floating around about us, and that information will power the machine learning and artificial intelligence technologies of the future.

Looking Ahead: Privacy, Machine Learning, and Artificial Intelligence

So how does privacy affect development in machine learning and artificial intelligence (AI)?

“AI builds on existing data and scours millions and millions of data sets and extracts information and puts together models, all of which is very good and valuable. But the issue is, how do the algorithms work?” pointed out Cavoukian.

She added that “algorithms aren’t neutral. We should be questioning and analyzing what the algorithm is giving us and what we are feeding it. There is a need to improve validity.”

Algorithms can solve problems for us and save us time. However, depending on the data they are fed, they can also be unintentionally discriminatory.

Algorithms can lead, and have led, to flawed analyses. Remember the 2016 Beauty.AI contest, in which AI judges ranked contestants with lighter skin tones above those with darker skin tones?

That same year, a ProPublica investigation found that software used to predict future criminals was biased against black defendants, whose risk scores could contribute to harsher sentencing. One major reason for failures like these is that minority groups are underrepresented in datasets, so algorithms can reach inaccurate conclusions that their creators would neither intend nor even detect.

Fortunately, experts in this field are taking a deeper and more critical look “under the hood” of algorithms.

We need to be able to use data to inform our decisions, but not allow it to be the endpoint.

Thank you for sharing your knowledge and time with us, Dr. Cavoukian! And thank you for being such an advocate for personal choice and control. I feel a new urgency to ask my educators, administrators, and even my colleagues about my data. I look forward to seeing how these privacy changes take shape over the next few years.