At graduate schools around the world, the admissions interview is the make-or-break stage for an applicant. It’s often the most authentic snapshot of an applicant that admissions teams get before making a decision.

With so much riding on the interview, you may be surprised to find your current interviews are not as effective as you think.

Why? Your interview structure.

In a structured interview, each applicant responds to similar questions in a similar format, so every applicant walks away having had a consistent experience.

On the other hand, an unstructured interview is a more casual conversation. Questions flow out of the discussion in an unrehearsed way, and the applicant’s experiences or career history may guide the conversation.

Many interviewers prefer unstructured interviews because they feel comfortable and authentic, and because these conversation-like interviews seem to give them a better chance to get to know an applicant based on their ‘gut reaction’ or intuition. In fact, anywhere from 85% to 97% of professionals rely to some degree on intuition when making a decision on a candidate.

While we may have learned to ‘trust our gut’ growing up, unfortunately, the results don’t tell the same story. There is a massive difference in the predictive validity of these two interview types. Unstructured interviews have been found to have a predictive validity of only 31%, compared to 62% for structured interviews.

That's right: Structured interviews were found to be twice as predictive as unstructured interviews.

Not only are unstructured interviews far more susceptible to factors like cognitive biases, reviewer fatigue, and burnout, but they also inherently create an unfair and unbalanced interview experience for applicants.

In our 2016 survey of 145 schools, only 45% of admissions teams said they interview applicants with a standard set of questions and 60% had standard evaluation criteria. Fortunately, by setting some standard practices with your review committee to ensure consistency, you can achieve much more predictive results from your interviews.

Developing structured admissions interview criteria

A structured interview requires consistency at its core, including:

- The same number of questions

- The same questions or the same type of questions (a template can help you vary the wording of questions that evaluate the same trait)

- The same evaluation method, such as a rubric or rating scale

By building your interviews with these processes in place, each applicant is given a fair shot to succeed in the interview and each reviewer has the tools for a successful evaluation. It also helps prevent things like distracted segues on shared interests from influencing the ultimate decision to accept or pass on admission.

That’s not to say that probing is wrong in a structured interview. Probing into answers can help uncover the best responses from applicants. However, as with the initial interview questions, schools should plan a series of ‘approved probing questions’ and decide how deeply a reviewer can probe. Reviewers who have developed a bias for an applicant, for example, may unconsciously probe deeper to give that applicant a better chance of improving their score.

As you can imagine, interviewer training and accountability are important to ensure the structure of the interview is maintained. Establishing official questions and evaluation criteria will require more planning early on, but the improved quality and consistency of your admissions assessment are worth it.

Making admissions decisions based on structured interviews

If your school is conducting structured interviews, you can improve your outcomes even further.

There is recent evidence for standardizing not just interviews themselves, but the synthesis of interview results and feedback.

In 2014, researchers at the University of Minnesota found that a simple algorithm, when fed evaluation scores of multiple interviewers, beat human judgment on selecting the top applicant by at least 25%.

To put these findings to work, the researchers recommend that you “have several managers independently weigh in on the final decision, and average their judgments.” Applied to admissions, this means having several members of an admissions committee or large review team submit their feedback independently, then averaging it to reach a decision.

It’s not only the most democratic way to consider reviewer feedback; the research suggests it’s also the most effective.
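For teams that track scores in a spreadsheet or script, the averaging step can be sketched in a few lines of Python. This is only an illustration of "average their judgments", not the algorithm used in the study. The reviewer names, applicant names, and 1-to-5 rating scale are all made up for the example.

```python
from statistics import mean


def average_scores(scores_by_reviewer):
    """Average each applicant's scores across all reviewers who scored them."""
    collected = {}
    for scores in scores_by_reviewer.values():
        for applicant, score in scores.items():
            collected.setdefault(applicant, []).append(score)
    return {applicant: mean(values) for applicant, values in collected.items()}


def rank_applicants(scores_by_reviewer):
    """Return applicant names ordered from highest to lowest average score."""
    averages = average_scores(scores_by_reviewer)
    return sorted(averages, key=lambda name: averages[name], reverse=True)


# Hypothetical scores on a 1-5 scale, each submitted independently.
scores = {
    "reviewer_a": {"applicant_1": 4, "applicant_2": 3, "applicant_3": 5},
    "reviewer_b": {"applicant_1": 5, "applicant_2": 3, "applicant_3": 4},
    "reviewer_c": {"applicant_1": 3, "applicant_2": 2, "applicant_3": 5},
}

for name in rank_applicants(scores):
    print(name, round(average_scores(scores)[name], 2))
```

The important design choice is that reviewers score on their own before anything is combined, so no single reviewer's impression sways the others.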

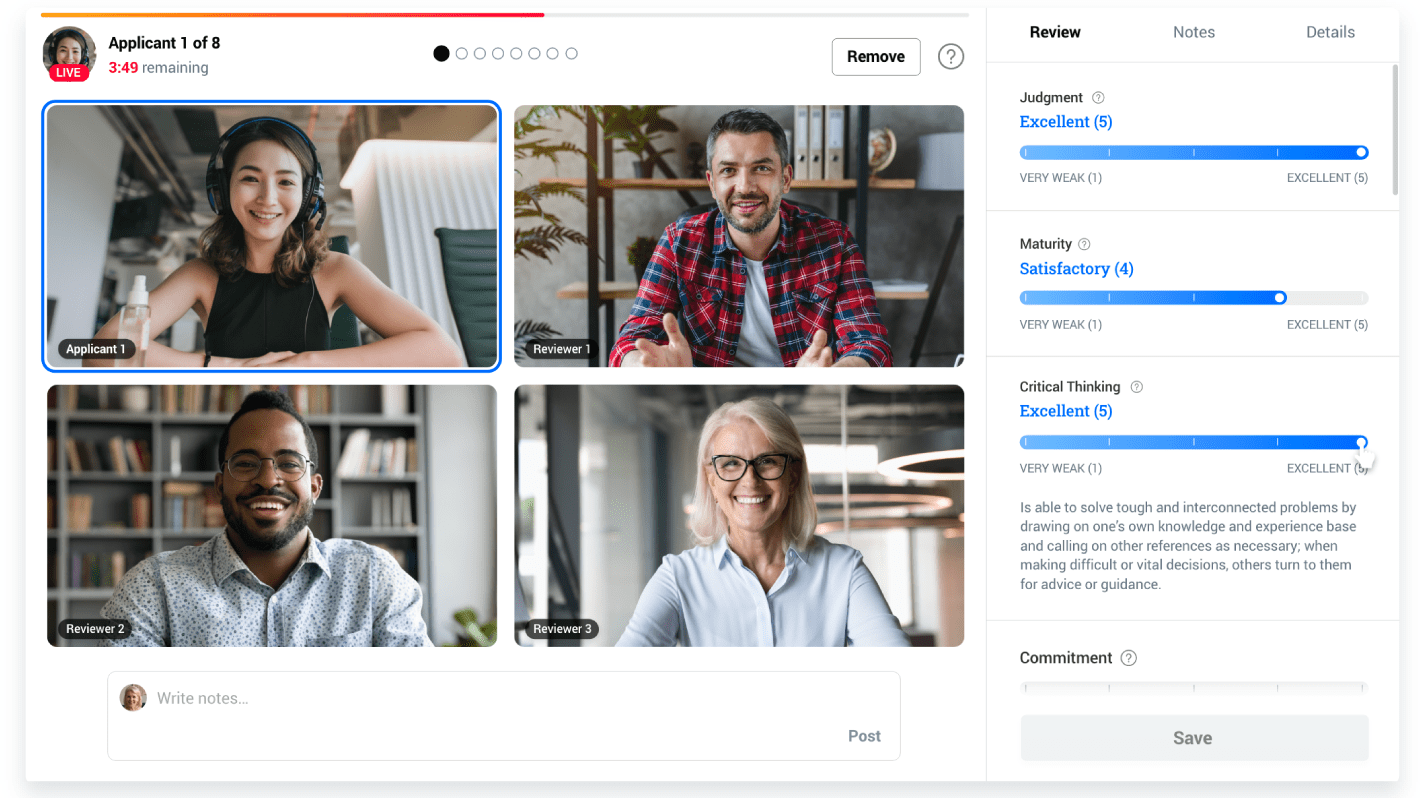

How we do this at Kira

At Kira, we’ve researched what makes the most effective interview because we want to help schools create that experience on our platform. Our team works with new clients to roll out structured digital interviews, including developing assessment criteria, questions, and evaluation rubrics for their timed video and written assessments to give applicants a consistent experience across the board.

Once interviews are submitted, multiple members of your team can review applicants and submit their scores. Kira will synthesize that data and give you an overall average based on the feedback provided by your team.

If you’d like to learn more, book a demo today to see how it works!