The 2021-2022 admissions cycle brought yet another evolution to many application processes. With campuses reopening, admissions leaders assessed how the newfound conveniences of online assessments would mesh with the return of time-honoured admissions traditions. This newest cohort of applicants was the first to experience what’s likely to become the new normal for higher education admissions. And some were the first to try to find ways to game the new system.

At Virginia Commonwealth University, reports of academic misconduct more than tripled in 2021, with over 1,000 reported instances of cheating. The University of Georgia also saw an increase, with over 600 reported cases in the fall of 2021, more than double the 228 cases reported in the fall of 2019.

“The rapid shift to online learning provides different opportunities for misconduct,” shared Phill Dawson, Associate Professor & Associate Director of the Centre for Research in Assessment and Digital Learning (CRADLE) at Deakin University. “While a holistic approach to academic integrity is advocated, it is likely that gaps will emerge. We must be continually updating the toolkit of support and detection measures.”

Over the course of the COVID-19 pandemic, the use of online proctoring services skyrocketed as schools rushed to find ways of validating applicant submissions. In May 2020, only a few months into the pandemic, 54% of institutions were already using proctoring services, with an additional 23% considering or planning to bring them on board.

At Kira Talent, we also invested in partnerships to help schools be proactive with fraud detection — starting in their admissions process.

In 2019, Kira teamed up with Turnitin, a global company dedicated to ensuring the integrity of education, to incorporate its industry-leading technology and help ensure that the assessments and supplemental materials applicants submit through Kira are authentically their own.

We took a closer look at this year's applicants to see how prevalent instances of text similarity were in this newest iteration of the admissions process and where it factored in the most.

Getting to know SimCheck and Turnitin

Over the past 20 years, Turnitin has processed submissions from over 40 million students, and it now analyzes over 200 million submissions annually. With Kira, the company is bringing that expertise from the classroom to the admissions office.

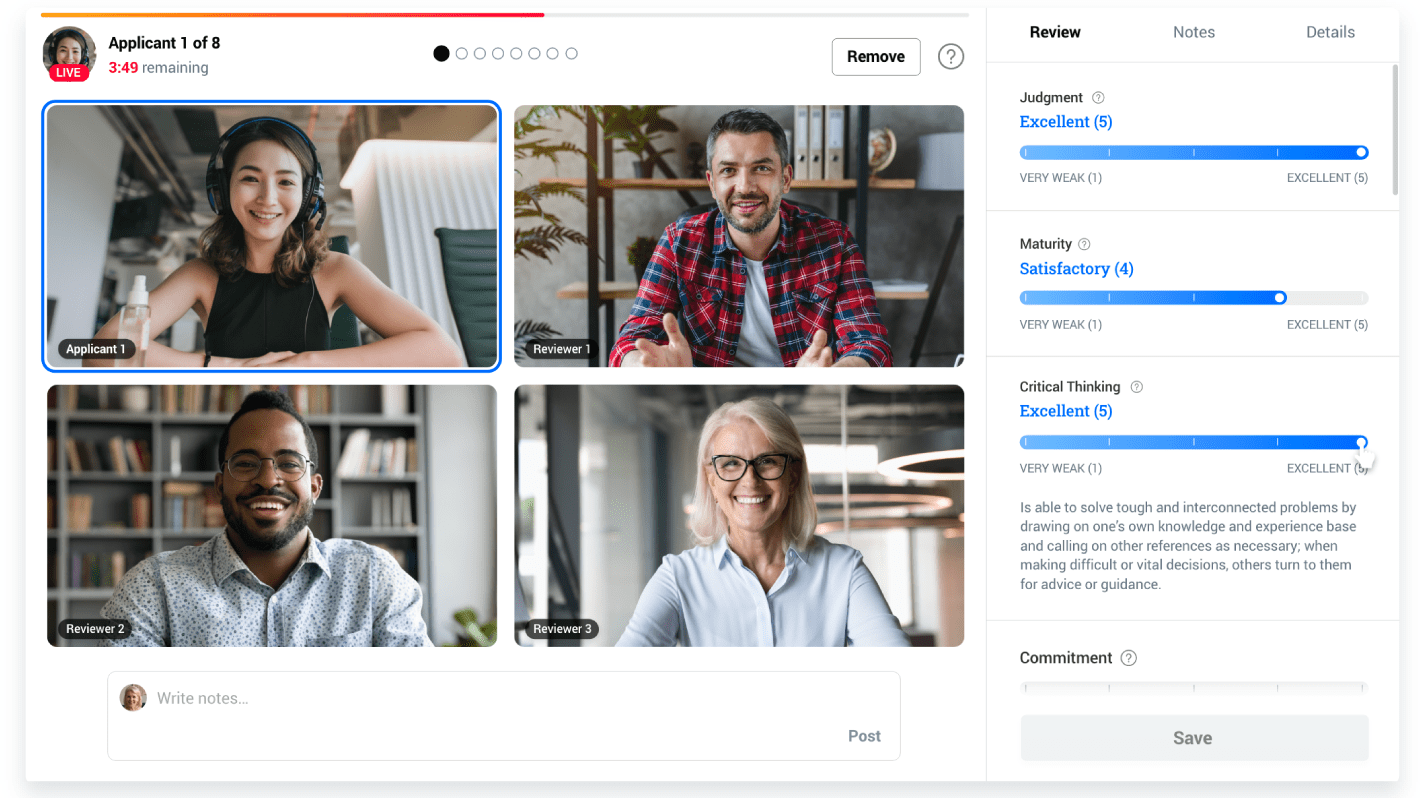

SimCheck in the Kira Talent platform removes the need to rely on reviewers to flag suspicious material, leaving them free to focus on the substance of an essay. In this way, SimCheck provides admissions teams with an unbiased, non-intrusive method of detecting text similarity.

And just how does this work? SimCheck automatically compares application materials against Turnitin’s ever-growing database of internet sources (an average of 22 million sources are added to the reference list each day), as well as against the institution’s private database of materials previously submitted to Turnitin.

Within minutes, the software cross-checks the submission against over 91 billion web pages.

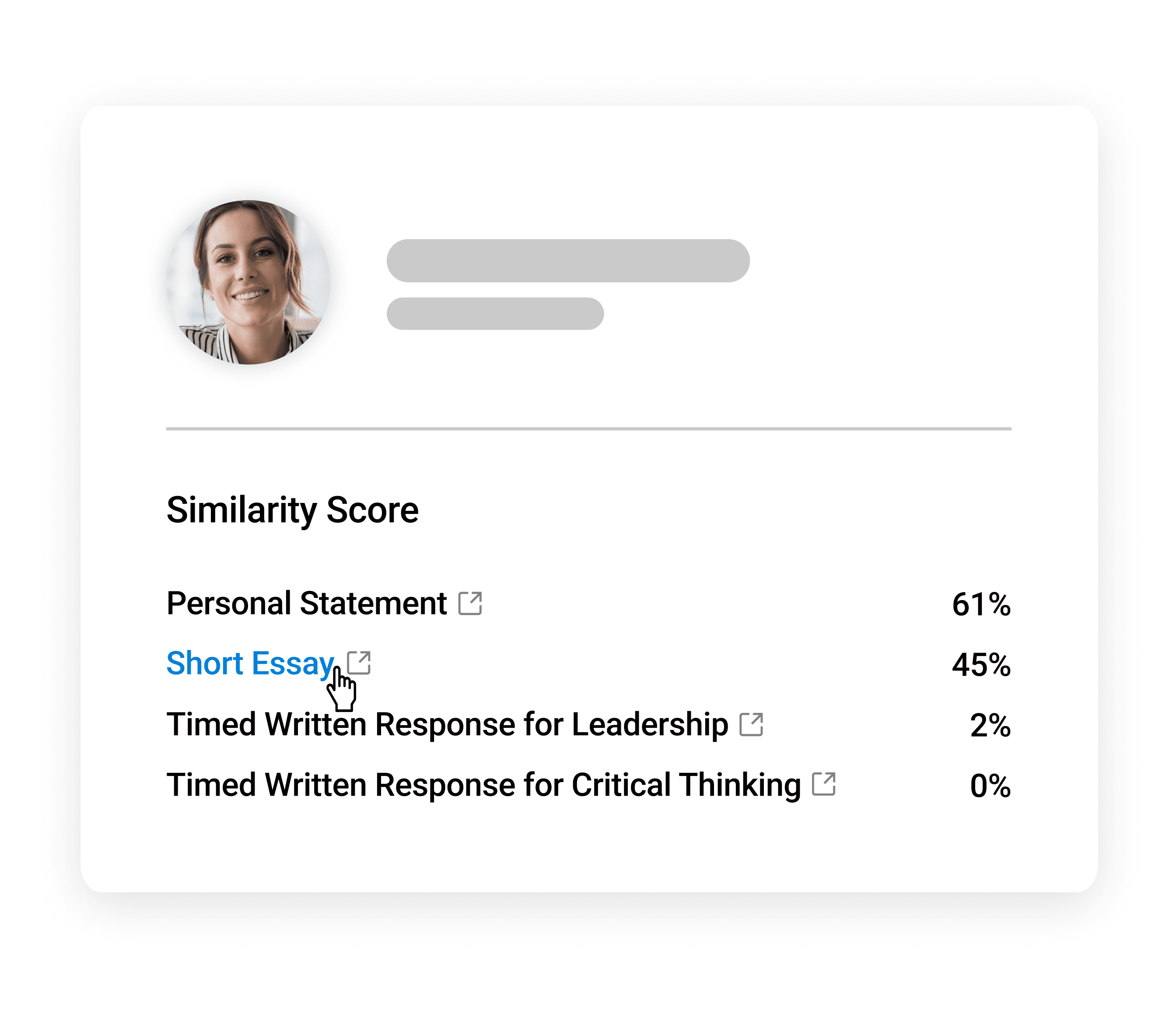

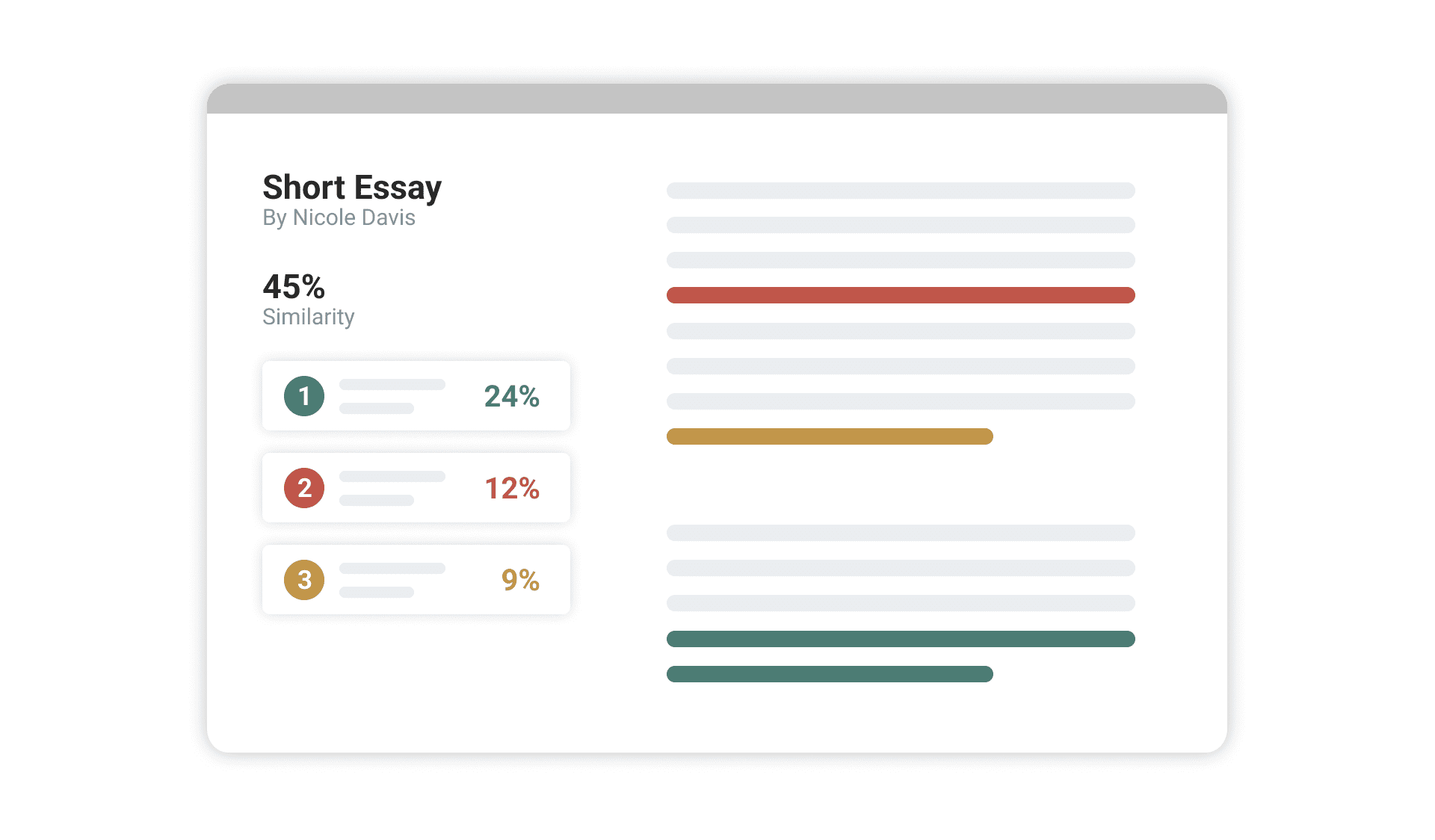

SimCheck then highlights passages of text that have appeared elsewhere online or in other submissions and gives admissions teams an overall Similarity Score: the percentage of the submission’s text that matches other sources.

With text matches highlighted and colour-coded, admissions teams can quickly see where an applicant’s submission resembles existing materials and assess whether the applicant is referencing that text or attempting to pass it off as their own.
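To make the idea of a percentage-based Similarity Score concrete, here is a minimal sketch of one simple way such a number could be computed: the share of a submission’s words that fall inside a run of n consecutive words (an n-gram, here n = 5) that also appears in some reference source. This is an illustrative assumption, not Turnitin’s or SimCheck’s actual matching algorithm, which is not described here; the function names `shingles` and `similarity_score` and the choice of n are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(words, n):
    """Return the set of all n-word sequences (shingles) in a list of words."""
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def similarity_score(submission, sources, n=5):
    """Toy similarity score: percentage of submission words covered by an
    n-word sequence that also appears in at least one source.
    Hypothetical illustration only, NOT Turnitin's algorithm."""
    words = tokenize(submission)
    if not words:
        return 0.0
    # Pool the shingles from every reference source.
    reference = set()
    for src in sources:
        reference |= shingles(tokenize(src), n)
    # Mark each word that sits inside any matching n-word window.
    covered = [False] * len(words)
    for i in range(len(words) - n + 1):
        if tuple(words[i:i + n]) in reference:
            for j in range(i, i + n):
                covered[j] = True
    return 100.0 * sum(covered) / len(words)


sources = ["the quick brown fox jumps over the lazy dog"]
print(similarity_score("the quick brown fox jumps over my own original words", sources))  # → 60.0
```

In the usage example, the first six of the ten submission words fall inside 5-word runs shared with the source, so the score is 60.0. A production system would also need to handle cases this sketch ignores, such as properly quoted and cited passages.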

Bringing fraud reporting to admissions

Since the advent of the internet, cases of plagiarism in higher education have skyrocketed. In a survey of 63,700 American undergraduate and 9,250 American graduate students, Donald McCabe of Rutgers University found that 36% of undergraduates and 24% of graduate students admitted to copying from internet sources without footnoting the source. A further 7% of undergraduates and 3% of graduate students admitted to turning in a paper written entirely by another person.

The attention surrounding fraud in college applications specifically has been rekindled by recent cheating scandals as well as the mass movement towards virtual assessment delivery and online application collection driven by the COVID-19 pandemic.

"There was likely increased cheating reported during the COVID-19 pandemic because there were more temptations and opportunities and stress and pressure, but also because faculty were able to detect it more," explained Tricia Bertram Gallant, who researches academic integrity at the University of California, San Diego.

"It's easier to catch in the virtual world than it is in the in-person world."

The Penn State Smeal College of Business discovered this back in 2009, when staff noticed similar writing across essays submitted by several different applicants. Already using one of Turnitin’s solutions in its classrooms, the school decided to use the detection software to help protect the integrity of its admissions process as well.

Since then, the school has found that between 4% and 9% of applications each year show evidence of unoriginal work.

Although only some cases make the headlines, each instance of plagiarism can damage a school’s ranking and reputation, reducing the number and quality of applicants in future admissions cycles. By flagging potential plagiarism during the application process, SimCheck helps admissions teams catch cheating early and prevent fraudulent behaviour before it ever reaches the classroom.

To put this year’s applications in context, we dug a little deeper to see how cases of copied application materials changed over the past year.

Digging into the data with Kira Talent

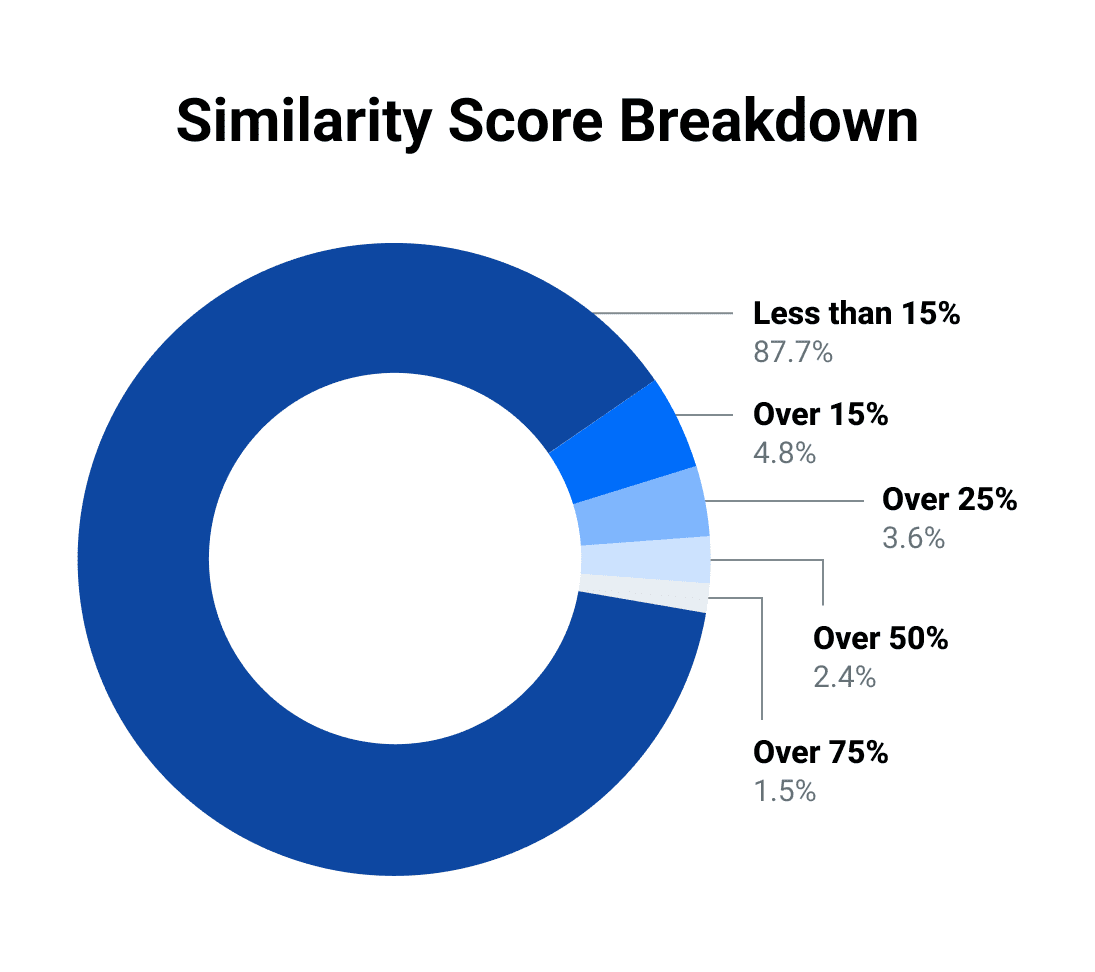

2.4% of applicant submissions had over 50% text similarity

Mirroring Virginia Commonwealth University’s findings, the percentage of applicant submissions with over 50% text similarity more than tripled this year, rising from 0.71% in the 2020/2021 admissions cycle to 2.4%.

1.5% of applicant submissions had over 75% text similarity

While a Similarity Score of 50% or higher is concerning enough to be flagged by SimCheck, a Similarity Score of 75% or higher means that at least three quarters of a written submission matches text from other works.

The percentage of applicant submissions with over 75% text similarity increased nearly fivefold over the previous year.
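The year-over-year figures above come down to simple shares and ratios. The sketch below assumes a hypothetical list of per-submission Similarity Scores (in percent) and shows how the share of submissions above a threshold could be computed, then checks the reported change at the 50% threshold (0.71% to 2.4%). The function names and sample scores are illustrative assumptions, not Kira’s actual reporting code.

```python
def share_above(scores, threshold):
    """Percentage of submissions whose Similarity Score is strictly above threshold."""
    if not scores:
        return 0.0
    return 100.0 * sum(1 for s in scores if s > threshold) / len(scores)


def fold_change(current_pct, previous_pct):
    """How many times larger the current share is than the previous share."""
    return current_pct / previous_pct


# Hypothetical Similarity Scores for a handful of submissions.
sample_scores = [3.0, 55.0, 80.0, 10.0]
print(share_above(sample_scores, 50))  # → 50.0 (two of four are over 50%)
print(share_above(sample_scores, 75))  # → 25.0 (one of four is over 75%)

# Reported shares of submissions over 50% similarity: 0.71% then 2.4%.
print(round(fold_change(2.4, 0.71), 2))  # → 3.38
```

A ratio of about 3.38 is consistent with the share of submissions over 50% similarity having more than tripled.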

Luckily for our partner schools, SimCheck was there to flag each of these cases, giving admissions teams and their reviewers the opportunity to investigate.

The average Similarity Score for the 2021/2022 admissions cycle was 3.12%

Although cases of potential application fraud surged this year, the data confirmed that the majority of applicants are submitting their own, original work.

For busy admissions teams, this is where SimCheck’s true value lies. With so many honest applicants to connect with, reviewers shouldn’t be burdened with digging through each application for signs of plagiarism. With SimCheck running in the background, reviewers no longer need to stay on high alert for outlier applications, giving them more time to focus on what matters most: getting to know their applicants.